Building a Website

Hardware resources

| Public IP | Private IP | OS | Spec |
|---|---|---|---|
| 102.106.104.206 | 172.21.0.7 | OpenCloudOS Server 8 | 4 cores, 16 GB RAM, 50 GB system disk + 100 GB high-performance cloud disk |

Goals

- K8S

- Ingress

- Kuboard

- Docker

- Docker Compose

- MySQL

- Typecho

Preparation

Security group settings

| Protocol | Port | Notes |
|---|---|---|
| TCP | 80 | Website (HTTP) |
| TCP | 443 | HTTPS |
| TCP | 3306 | MySQL |
| TCP | 10081 | Kuboard |
| UDP | 10081 | Kuboard |

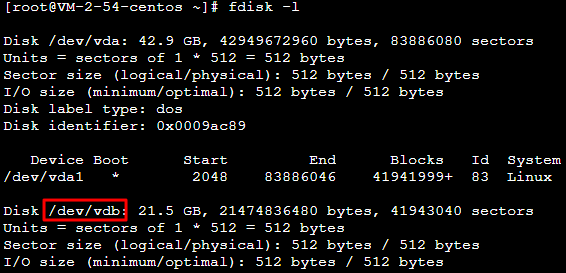

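Mounting the disk

Attach the disk to the instance in the cloud provider's console.

Initializing the disk

# List the disks
fdisk -l
# Format the disk (this erases any data on /dev/vdb)
mkfs.ext4 /dev/vdb
# Create the mount point and mount the disk at /data
mkdir -p /data
mount /dev/vdb /data
# On Tencent Cloud, an attached disk is not remounted automatically after a reboot. The steps below enable automatic mounting.
# Back up /etc/fstab first
cp -r /etc/fstab /home
vi /etc/fstab
# Move the cursor to the end of the file and add a line in this format:
<device> <mount point> <filesystem type> <mount options> <dump frequency> <fsck order at boot>
# For example, to mount through the elastic cloud disk's symlink, continuing the example above, add:
/dev/disk/by-id/virtio-disk-drkhklpe /data ext4 defaults 0 0
# Use ls -l /dev/disk/by-id to find the elastic cloud disk's symlink
[root@fight ~]# ls -l /dev/disk/by-id
total 0
lrwxrwxrwx 1 root root 9 Mar 28 15:11 ata-QEMU_DVD-ROM_QM00002 -> ../../sr0
lrwxrwxrwx 1 root root 9 Mar 28 15:11 virtio-disk-drkhklpe -> ../../vdb
# Check that /etc/fstab was written correctly; no error output means the change is valid
mount -a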
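The fstab edit can also be scripted so that running it twice does not add a duplicate line. The following is only a sketch: `ensure_fstab_entry` is a hypothetical helper name, it always writes the `defaults 0 0` options used in this walkthrough, and the demo runs against a scratch file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Append an fstab entry only if the mount point is not already listed.
# Usage: ensure_fstab_entry <fstab-file> <device> <mount-point> <fs-type>
ensure_fstab_entry() {
    fstab_file=$1 device=$2 mount_point=$3 fs_type=$4
    # Skip comment lines; field 2 of an fstab line is the mount point.
    if awk -v mp="$mount_point" \
        '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab_file"; then
        echo "entry for $mount_point already present"
        return 0
    fi
    printf '%s %s %s defaults 0 0\n' "$device" "$mount_point" "$fs_type" >> "$fstab_file"
    echo "added entry for $mount_point"
}

# Demo against a scratch file, not the real /etc/fstab.
scratch=$(mktemp)
ensure_fstab_entry "$scratch" /dev/disk/by-id/virtio-disk-drkhklpe /data ext4
ensure_fstab_entry "$scratch" /dev/disk/by-id/virtio-disk-drkhklpe /data ext4
cat "$scratch"
rm -f "$scratch"
```

To use it for real, pass /etc/fstab as the first argument (as root) and then run mount -a to validate the result.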

# Reboot the server, then verify
reboot
[root@fight ~]# df -hl|grep dev
devtmpfs 7.7G 0 7.7G 0% /dev
tmpfs 7.7G 24K 7.7G 1% /dev/shm
/dev/vda1 50G 9.7G 38G 21% /
/dev/vdb 98G 5.0G 88G 6% /data

Modifying the server's default settings

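Change the hostname

vim /etc/hostname
vim /etc/hosts
# Add: 127.0.0.1 fight fight

Disable the firewall

systemctl stop firewalld.service
systemctl disable firewalld.service

Disable SELinux

SELinux's structure and configuration are very complex and involve many concepts, so mastering it is hard. Many Linux administrators disable it to avoid the hassle. It is disabled here to rule out unexpected problems during installation.

# Disable temporarily
$ setenforce 0
$ getenforce
Permissive
# Disable permanently (requires a reboot)
$ vi /etc/sysconfig/selinux
SELINUX=disabled

Raise the file limits

$ vi /etc/security/limits.conf
# Append these lines to the end of the file; the leading * is required
* soft nofile 65535
* hard nofile 65535

Adjust kernel parameters

$ vi /etc/sysctl.conf
vm.max_map_count=262144
$ sysctl -p

Reboot

reboot

Installing K8S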

Install sealos 4.3.7

If Docker is already installed, uninstall it first.

# Check the system architecture
[root@fight k8s]# lscpu | grep Architecture
Architecture: x86_64

If the output contains x86_64 or i686, the CPU is x86 (AMD or Intel). If it contains armv7l, aarch64, or arm64, the CPU is ARM.
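The architecture check can be turned into a small lookup that picks the archive name to download. This is a sketch under assumptions: `release_arch` is a hypothetical helper, only the two 64-bit architectures are mapped, and the arm64 archive is assumed to follow the same naming pattern as the amd64 one used in this walkthrough.

```shell
#!/bin/sh
# Map `uname -m` output to the architecture suffix used in release file names.
release_arch() {
    case "$1" in
        x86_64|amd64)  echo amd64 ;;
        aarch64|arm64) echo arm64 ;;
        *) echo "unsupported architecture: $1" >&2; return 1 ;;
    esac
}

# Print the archive name for the current machine, if it is supported.
if arch=$(release_arch "$(uname -m)"); then
    echo "sealos_4.3.7_linux_${arch}.tar.gz"
fi
```

On the x86_64 host shown above this prints the same file name that the download step uses.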

# Download
wget https://mirror.ghproxy.com/https://github.com/labring/sealos/releases/download/v4.3.7/sealos_4.3.7_linux_amd64.tar.gz \
&& tar zxvf sealos_4.3.7_linux_amd64.tar.gz sealos && chmod +x sealos && mv sealos /usr/bin
# Verify
sealos version

Install the cluster

https://sealos.run/docs/self-hosting/lifecycle-management/quick-start/install-cli

sealos run registry.cn-shanghai.aliyuncs.com/labring/kubernetes:v1.27.12 \
--env criData=/data/k8s/containerd \
registry.cn-shanghai.aliyuncs.com/labring/helm:v3.9.4 \
registry.cn-shanghai.aliyuncs.com/labring/cilium:v1.13.4 \
--single
# --env criData sets where the K8S images are stored; keep it off the system disk
# cilium is the network plugin (CNI) for K8S and must not be omitted, otherwise the node reports "CNI plugin not installed"
# helm is the installation tool used later
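Before pointing criData at /data, it may be worth confirming that /data really sits on the data disk and not on the system disk. The sketch below compares the filesystem reported by `df -P` for two paths; `fs_of` and `same_filesystem` are hypothetical helper names, and the comparison is only meaningful for real block devices such as /dev/vda1 and /dev/vdb.

```shell
#!/bin/sh
# Print the filesystem (device) that holds an existing path.
fs_of() {
    df -P "$1" | awk 'NR == 2 { print $1 }'
}

# Succeeds when both paths live on the same filesystem.
same_filesystem() {
    [ "$(fs_of "$1")" = "$(fs_of "$2")" ]
}

data_dir=/data   # mount point of the data disk in this walkthrough
if [ -d "$data_dir" ] && ! same_filesystem / "$data_dir"; then
    echo "$data_dir is on its own disk: $(fs_of "$data_dir")"
else
    echo "warning: $data_dir is missing or shares the system disk"
fi
```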

# If the installation runs into problems, wipe the cluster and start over
sealos reset --force=true
# Check the node status
[root@fight ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
fight Ready control-plane 27h v1.27.12
# Check the pod status
kubectl get pods --all-namespaces
# Enable kubectl command completion
yum install -y bash-completion
source <(kubectl completion bash)

Changing the default image storage location

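Do this only if the containerd path was not specified at install time.

ll /etc/containerd/
# The /etc/containerd/ directory may already exist
mkdir -p /etc/containerd/
cd /etc/containerd/
containerd config default > /etc/containerd/config.toml
vim /etc/containerd/config.toml
# In the default file, root is commented out. If you hit CRI-related errors, comment out the line disabled_plugins = ["cri"]
root = "/container/containerd"
# Copy the existing data so that it ends up at /container/containerd
mkdir -p /container
cp -ra /var/lib/containerd /container/
systemctl restart containerd
systemctl status containerd
# Check that the location has changed
crictl info

Common sealos commands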

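# Note: join and clean are sealos 3.x subcommands. With sealos 4.x, the equivalents are sealos add --nodes <ip> / sealos add --masters <ip> and sealos delete --nodes <ip> / sealos delete --masters <ip>
# Add nodes
sealos join --node 192.168.0.6
sealos join --node 192.168.0.6 --node 192.168.0.7
sealos join --node 192.168.0.6-192.168.0.9 # or a range of consecutive IPs
# Remove specific master nodes
sealos clean --master 192.168.0.6
sealos clean --master 192.168.0.6 --master 192.168.0.7
sealos clean --master 192.168.0.6-192.168.0.9 # or a range of consecutive IPs
# Remove specific worker nodes
sealos clean --node 192.168.0.6
sealos clean --node 192.168.0.6 --node 192.168.0.7
sealos clean --node 192.168.0.6-192.168.0.9 # or a range of consecutive IPs

Troubleshooting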

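# On a single-node cluster, a NoSchedule taint on the control-plane node keeps ordinary pods from being scheduled
# Show the node taints
$ kubectl describe node|grep -E "Name:|Taints:"
Name: fight
Taints: node-role.kubernetes.io/master:NoSchedule
# Remove the taint. The key must match the Taints line above; newer Kubernetes releases use node-role.kubernetes.io/control-plane instead of master
$ kubectl taint node fight node-role.kubernetes.io/master-
node/fight untainted
# Show the node taints again
$ kubectl describe node|grep -E "Name:|Taints:"
Name: fight
Taints: <none>

Registry mirrors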

mkdir /etc/containerd/certs.d/docker.io -p

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://docker.io"

[host."https://md6rxzuc.mirror.aliyuncs.com"]

capabilities = ["pull", "resolve"]

[host."https://dockerproxy.com"]

capabilities = ["pull", "resolve"]

[host."https://docker.m.daocloud.io"]

capabilities = ["pull", "resolve"]

[host."https://reg-mirror.qiniu.com"]

capabilities = ["pull", "resolve"]

[host."https://registry.docker-cn.com"]

capabilities = ["pull", "resolve"]

[host."http://hub-mirror.c.163.com"]

capabilities = ["pull", "resolve"]

EOF

systemctl daemon-reload && systemctl restart containerd

Installing kubecm

curl -Lo kubecm.tar.gz https://github.com/sunny0826/kubecm/releases/download/v0.27.1/kubecm_v0.27.1_Linux_x86_64.tar.gz
# Linux & macOS: extract the binary and move it onto the PATH
tar -zxvf kubecm.tar.gz kubecm
sudo mv kubecm /usr/local/bin/
# Verify
kubecm list

Installing Docker

Do not install the default version provided by yum install docker; otherwise, mounting Docker into the Jenkins container on K8S fails with an error.

Remove old versions

$ sudo yum remove docker \
docker-client \
docker-client-latest \
docker-common \
docker-latest \
docker-latest-logrotate \
docker-logrotate \
docker-selinux \
docker-engine-selinux \
docker-engine
yum list installed|grep docker
# If any packages remain
yum remove docker-ce-cli.x86_64
yum remove docker-scan-plugin.x86_64

Install the required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Add the package repository

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Refresh the yum cache

sudo yum makecache

List the available docker-ce versions

yum list docker-ce --showduplicates | sort -r

Install a specific docker-ce version

yum install docker-ce-20.10.24-3.el8 docker-ce-cli-20.10.24-3.el8 containerd.io -y

Changing the Docker storage location

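mkdir -p /data/docker/data
# Change the Docker storage directory
1. Edit the unit file (--data-root replaces the deprecated --graph flag)
$ vim /usr/lib/systemd/system/docker.service
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --data-root=/data/docker/data
2. Copy the existing data (the trailing /. copies the directory contents instead of nesting a docker/ directory inside the target)
$ cp -ra /var/lib/docker/. /data/docker/data/
3. Restart Docker
$ systemctl daemon-reload
$ systemctl restart docker
4. Check the result (Docker Root Dir should show the new path)
$ docker info

Docker registry mirror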

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://md6rxzuc.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

Installing Docker Compose

sudo curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

docker-compose --version

[root@fight bin]# docker-compose --version

Docker Compose version v2.26.0

Installing MySQL

Save the following as docker-compose.yaml:

version: '3.1'
services:
  mysql:
    image: mysql:8.0
    restart: always
    container_name: mysql
    environment:
      - MYSQL_ROOT_PASSWORD=XXXXX
      - TZ=Asia/Shanghai
    volumes:
      - /data/docker-compose/mysql/data:/var/lib/mysql
      - /data/docker-compose/mysql/conf/my.cnf:/etc/my.cnf
    ports:
      - 3306:3306
    network_mode: bridge

# Create the data and conf directories
$ mkdir -p /data/docker-compose/mysql/data /data/docker-compose/mysql/conf
# Create my.cnf at the path mounted in docker-compose.yaml
$ vim /data/docker-compose/mysql/conf/my.cnf
[mysqld]
user=mysql
default-storage-engine=INNODB
#character-set-server=utf8
#character-set-client-handshake=FALSE
character-set-server=utf8mb4
collation-server=utf8mb4_unicode_ci
lower_case_table_names = 1
#init_connect='SET NAMES utf8mb4'
[client]
# The utf8mb4 character set can store emoji
default-character-set=utf8mb4
[mysql]
default-character-set=utf8mb4
# Start MySQL
$ docker-compose -f docker-compose.yaml up -d

Installing Nginx Ingress

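Version compatibility

https://docs.nginx.com/nginx-ingress-controller/technical-specifications/

Installing with Helm

# Offline: pull and unpack the chart
helm pull oci://ghcr.io/nginxinc/charts/nginx-ingress --untar --version 1.1.3
cd nginx-ingress
# Edit values.yaml: set hostNetwork: true, and turn on -enable-snippets=true so that snippet annotations can be used
# **** Note **** On a single machine that does not use the cloud provider's load balancer, host networking must be enabled so the Ingress controller receives the traffic directly
kubectl create namespace nginx-ingress
helm install nginx-ingress . -n nginx-ingress
# Verify
kubectl get all -n nginx-ingress
# In this network mode, ports 80 and 443 should both be in use on the host
ss -tuln|grep 80
ss -tuln|grep 443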
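A plain grep 80 also matches ports such as 8080 or 10080, so a stricter check can help. The sketch below reads `ss -tuln` output and compares only the port part of the local address column; `port_listening` is a hypothetical helper name, and the column position assumes the usual `ss -tuln` layout with the local address in the fifth field.

```shell
#!/bin/sh
# Succeeds if the given port appears as a local port in `ss -tuln` output.
# Reads the ss output on stdin; $1 is the port number.
port_listening() {
    awk -v port="$1" '
        NR > 1 {
            # IPv6 addresses contain extra colons, so the port is the last piece.
            n = split($5, parts, ":")
            if (parts[n] == port) hit = 1
        }
        END { exit !hit }'
}

# Check the two Ingress ports when ss is available on this machine.
if command -v ss >/dev/null 2>&1; then
    for port in 80 443; do
        if ss -tuln | port_listening "$port"; then
            echo "port $port: listening"
        else
            echo "port $port: not listening"
        fi
    done
fi
```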

# Uninstall
helm uninstall nginx-ingress -n nginx-ingress

Legacy offline installation (not recommended)

# https://docs.nginx.com/nginx-ingress-controller/installation/installation-with-manifests/

git clone https://github.com/nginxinc/kubernetes-ingress.git --branch v2.4.2

$ cd kubernetes-ingress/deployments

$ kubectl apply -f common/ns-and-sa.yaml

$ kubectl apply -f rbac/rbac.yaml

$ kubectl apply -f rbac/ap-rbac.yaml

$ kubectl apply -f rbac/apdos-rbac.yaml

$ kubectl apply -f common/default-server-secret.yaml

$ kubectl apply -f common/nginx-config.yaml

$ kubectl apply -f common/ingress-class.yaml

$ kubectl apply -f common/crds/k8s.nginx.org_virtualservers.yaml

$ kubectl apply -f common/crds/k8s.nginx.org_virtualserverroutes.yaml

$ kubectl apply -f common/crds/k8s.nginx.org_transportservers.yaml

$ kubectl apply -f common/crds/k8s.nginx.org_policies.yaml

$ kubectl apply -f daemon-set/nginx-ingress.yaml

Setting the default IngressClass

# List the IngressClasses
kubectl get ingressclass
# [root@jdpai ingress]# kubectl get ingressclass
NAME CONTROLLER PARAMETERS AGE
nginx nginx.org/ingress-controller <none> 48m
# Make it the default
kubectl edit ingressClass nginx
# Add the following under annotations:
# ingressclass.kubernetes.io/is-default-class: "true"

Alternatively, when deploying nginx-ingress from the manifests, open common/ingress-class.yaml and uncomment the annotation:

apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
  # annotations:
  #   ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: nginx.org/ingress-controller

Verification

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  rules:
    - host: ddup.live
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: nginx-service
                port:
                  number: 80

Save the manifest above as test.yaml and run kubectl apply -f test.yaml

Open ddup.live in a browser.

If the page loads, the setup works :beer::beer::beer::beer::beer::beer:

Installing Kuboard

Kuboard is installed with Docker here, which is the officially recommended method. Installing it inside the cluster ran into a port-already-in-use problem on newer K8S versions.

sudo docker run -d \
--restart=unless-stopped \
--name=kuboard \
-p 31080:80/tcp \
-p 10081:10081/tcp \
-p 10081:10081/udp \
-e KUBOARD_ENDPOINT="http://172.21.0.7:31080" \
-e KUBOARD_AGENT_SERVER_UDP_PORT="10081" \
-e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
-v /data/docker/kuboard/data:/data \
eipwork/kuboard:v3
# Run the following to route requests to Kuboard through the Ingress
# Create the namespace
kubectl create namespace kuboard
# Create the TLS secret
kubectl create secret tls kuboard.ddup.live \
--cert=/data/web/ssl/kuboard.XXX.live_nginx/kuboard.XXX.live_bundle.crt \
--key=/data/web/ssl/kuboard.XXX.live_nginx/kuboard.XX.live.key \
-n kuboard

YAML file contents

---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.org/server-snippets: |
      location / {
        proxy_pass http://172.21.0.7:31080/; # Replace with your Kuboard IP address and port; use the IP address, not the value of the KUBOARD_ENDPOINT parameter
        client_max_body_size 10m;
        gzip on;
      }
      location /k8s-ws/ {
        proxy_pass http://172.21.0.7:31080/k8s-ws/; # Replace with your Kuboard IP address and port
        proxy_http_version 1.1;
        proxy_pass_header Authorization;
        proxy_set_header Upgrade "websocket";
        proxy_set_header Connection "upgrade";
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        # proxy_set_header X-Forwarded-Proto https; # if HTTPS is enabled on the reverse proxy
      }
      location /k8s-proxy/ {
        proxy_pass http://172.21.0.7:31080/k8s-proxy/; # Replace with your Kuboard IP address and port
        proxy_http_version 1.1;
        proxy_pass_header Authorization;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection $connection_upgrade;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        # proxy_set_header X-Forwarded-Proto https; # if HTTPS is enabled on the reverse proxy
        gzip on;
      }
      error_page 404 /404.html;
      location = /40x.html {
      }
      error_page 500 502 503 504 /50x.html;
      location = /50x.html {
      }
  name: kuboard-v3
  namespace: kuboard
spec:
  ingressClassName: nginx
  rules:
    - host: kuboard.XXXX.live
  tls:
    - hosts:
        - kuboard.XXX.live
      # secretName must match the name of the TLS secret created in the kuboard namespace
      secretName: kuboard.XXX.live

kubectl apply -f kuboard-ingress.yaml

Importing the cluster into Kuboard

Import the cluster using the agent method. Importing with the configuration from cat ~/.kube/config was also tried, but it failed.

Installing in the cluster

Download the YAML

wget https://addons.kuboard.cn/kuboard/kuboard-v3.yaml

Apply the YAML

kubectl apply -f kuboard-v3.yaml

Check the pod status and wait until all pods are ready

[root@k8s1 k8s]# kubectl get pods -n kuboard

NAME READY STATUS RESTARTS AGE

kuboard-agent-2-7697b8bc5c-6svjx 1/1 Running 2 2m2s

kuboard-agent-8575fb6585-26rk9 1/1 Running 2 2m2s

kuboard-etcd-5bv27 1/1 Running 0 4m52s

kuboard-etcd-pvf45 1/1 Running 0 4m52s

kuboard-etcd-sfbr8 1/1 Running 0 4m52s

kuboard-questdb-86dbbd7774-6fqdz 1/1 Running 0 2m2s

kuboard-v3-59ccddb94c-rlcpf 1/1 Running 0 4m52s
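Waiting for readiness can be scripted instead of eyeballing the table. The sketch below parses `kubectl get pods` output and succeeds only when every pod is Running with all of its containers ready; `all_pods_ready` is a hypothetical helper name, and the demo feeds it two rows copied from the listing above so that it runs without a cluster.

```shell
#!/bin/sh
# Succeeds when every pod listed on stdin is Running with READY n/n.
# Expects `kubectl get pods` output, including the header line.
all_pods_ready() {
    awk '
        NR > 1 {
            split($2, ready, "/")
            if (ready[1] != ready[2] || $3 != "Running") {
                print "not ready: " $1
                bad = 1
            }
            seen = 1
        }
        # Fail on any unready pod, and also when no pods were listed at all.
        END { exit (bad || !seen) }'
}

# Demo with sample rows; on a cluster, pipe real kubectl output instead.
all_pods_ready <<'EOF' && echo "all pods ready"
NAME                              READY  STATUS   RESTARTS  AGE
kuboard-etcd-5bv27                1/1    Running  0         4m52s
kuboard-v3-59ccddb94c-rlcpf       1/1    Running  0         4m52s
EOF
```

On the cluster, the equivalent call is kubectl get pods -n kuboard | all_pods_ready. kubectl also has a built-in alternative: kubectl wait --for=condition=Ready pod --all -n kuboard --timeout=300s.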

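Accessing Kuboard

# Make sure port 30080 on the public IP is open
Open http://XXX.XXX.53.92:30080 in a browser.

Log in with the initial username and password:

Username: admin
Password: Kuboard123

Change the password after logging in.

Installing Typecho

Database initialization

CREATE DATABASE typecho;
CREATE USER 'xxc2hoRoot'@'%' IDENTIFIED BY 'zt%xxxxx';
GRANT ALL PRIVILEGES ON typecho.* TO 'xxc2hoRoot'@'%';
FLUSH PRIVILEGES;

Cluster setup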

kubectl create namespace typecho
kubectl create secret tls https.XX.live \
--cert=/data/web/ssl/ddup.XXX/XX.live_bundle.crt \
--key=/data/web/ssl/ddup.XXX/XX.live.key \
-n typecho

---
apiVersion: v1
data:
  TIMEZONE: Asia/Shanghai
  TYPECHO_DB_CHARSET: utf8mb4
  TYPECHO_DB_DATABASE: typecho
  TYPECHO_DB_HOST: 172.21.0.7
  TYPECHO_DB_PASSWORD: zt%XXXX
  TYPECHO_DB_USER: XXXX
  TYPECHO_SITE_URL: 'http://XXX.live'
  TYPECHO_USER_MAIL: XXX@qq.com
  TYPECHO_USER_NAME: XXXXX
  TYPECHO_USER_PASSWORD: XXXX
kind: ConfigMap
metadata:
  name: typecho-config
  namespace: typecho

# The environment variables appear to have no effect; the same values had to be entered again in the installer
kubectl apply -f typecho-config.yaml

Deployment, Service, and Ingress

---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: typecho
  name: typecho
  namespace: typecho
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s.kuboard.cn/name: typecho
  template:
    metadata:
      creationTimestamp: null
      labels:
        k8s.kuboard.cn/name: typecho
    spec:
      containers:
        - envFrom:
            - configMapRef:
                name: typecho-config
          image: 'joyqi/typecho:nightly-php7.4-apache'
          imagePullPolicy: IfNotPresent
          name: typecho
          ports:
            - containerPort: 80
              name: http
              protocol: TCP
          resources: {}
          volumeMounts:
            - mountPath: /app/usr
              name: volume-hwdk8
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      volumes:
        - hostPath:
            path: /data/k8s/typecho/data
            type: DirectoryOrCreate
          name: volume-hwdk8
---
apiVersion: v1
kind: Service
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: typecho
  name: typecho
  namespace: typecho
spec:
  ports:
    - name: j4kbrd
      port: 80
      protocol: TCP
      targetPort: 80
  selector:
    k8s.kuboard.cn/name: typecho
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  labels:
    k8s.kuboard.cn/name: typecho
  name: typecho
  namespace: typecho
spec:
  ingressClassName: nginx
  rules:
    - host: ddup.live
      http:
        paths:
          - backend:
              service:
                name: typecho
                port:
                  number: 80
            path: /
            pathType: Prefix
  tls:
    - hosts:
        - ddup.live
      secretName: https.ddup.live
status:
  loadBalancer: {}

Choosing a theme

https://github.com/HaoOuBa/Joe
